CES 2026

NVIDIA vs. AMD and Key Takeaways for Your Investment Portfolio

“CES gathers the global tech ecosystem for significant deal-making, partnerships, and idea-sharing, serving as the stage where ideas become reality”

— Kinsey Fabrizio, President of CTA

Executive Summary

After watching some content from CES 2026 (Consumer Electronics Show), I’ve decided to give a quick update, since I’m guessing most of y’all don’t want to sit through every video, synthesize the new announcements and technology, and figure out what’s next in AI.

One thing is for sure: artificial intelligence has evolved past a ‘venture-capital’ wager and is well on its way to becoming foundational infrastructure in our economy and in every corporate ecosystem.

—> That’s obvious.

For us investors, I think the question should now be how to position ourselves across layers of the value chain to maximize returns while managing the concentration risk in the upstream infrastructure layer, which is currently dominated by players like Nvidia, AMD, Micron, TSMC, etc.

While exploring these alternative AI opportunities, it’s important to remember that companies like Nvidia and AMD still own the foundation of the compute economy, and their technological innovation ripples through the ecosystem, influencing all AI innovation.

In this article, we’ll explore the innovation battle between infrastructure giants Nvidia and AMD, and lay out the upcoming AI hardware and software each company discussed at the conference.

Let’s dive in.

Nvidia: From GPUs to Full-Stack AI Platforms

Let’s start with the obvious — Nvidia.

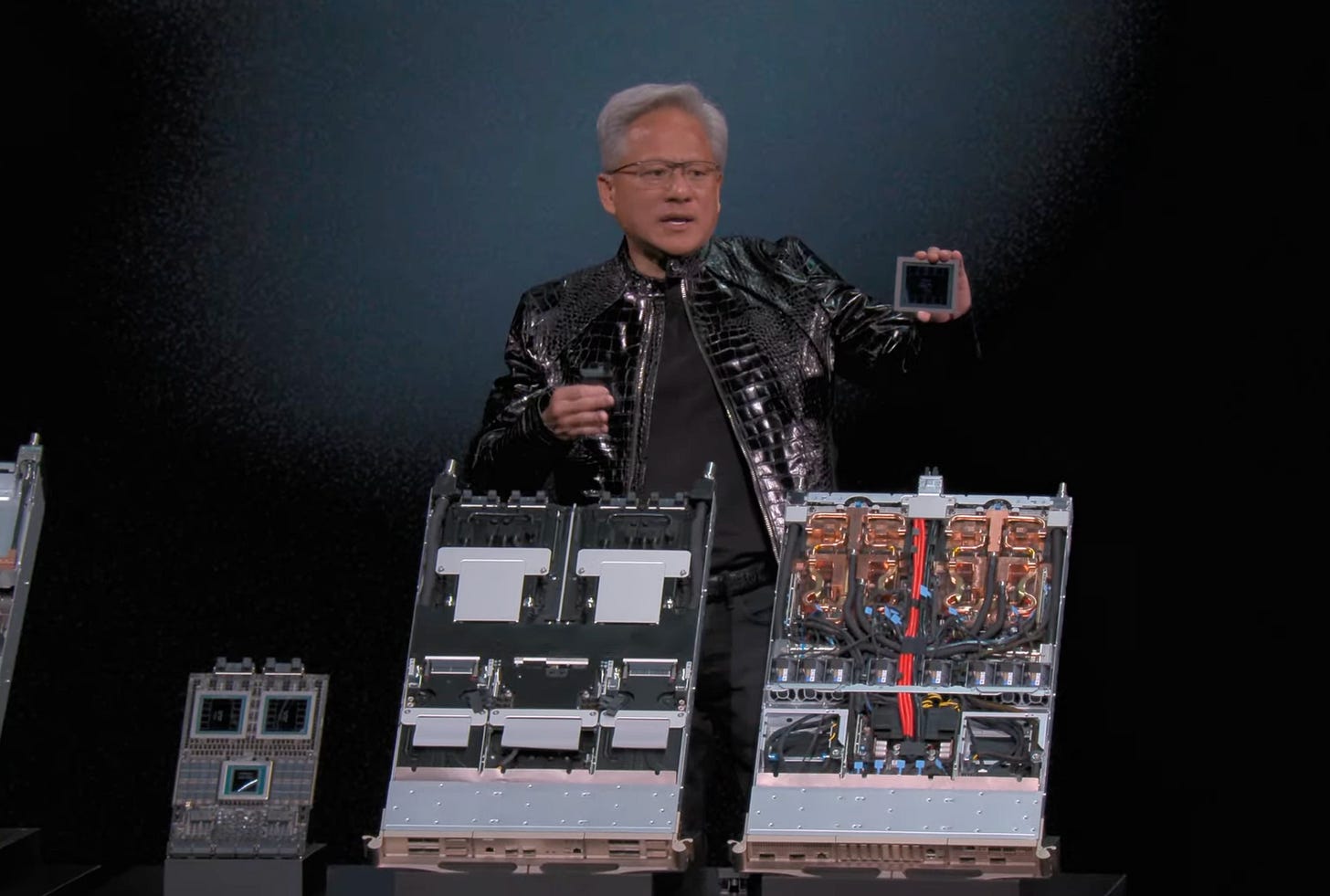

Jensen first unveiled the Vera Rubin platform: a rack-scale, next-generation AI supercomputing architecture that integrates CPUs, GPUs, networking and DPUs designed for both training and inference workloads at massive scale.

This wasn’t a typical next-generation chip refresh. For one, Rubin was shown months earlier than usual, signaling accelerating demand for larger and more complex AI models.

The Rubin platform aims to make the world's most advanced AI accessible and affordable, supporting the next wave of AI development for the MAG7 and major cloud providers / enterprises.

More specifically, the platform achieves a ~10x reduction in inference token costs and requires ~4x fewer GPUs for training, compared to the Blackwell predecessor.

When I first read this, I was confused: wouldn’t this cannibalize Nvidia’s own chip sales?

However, from Nvidia’s perspective, this is a calculated move to prevent market stagnation and maintain its monopoly. If that doesn’t make sense yet, let’s look at how Jensen framed it on stage, through an economic theory.

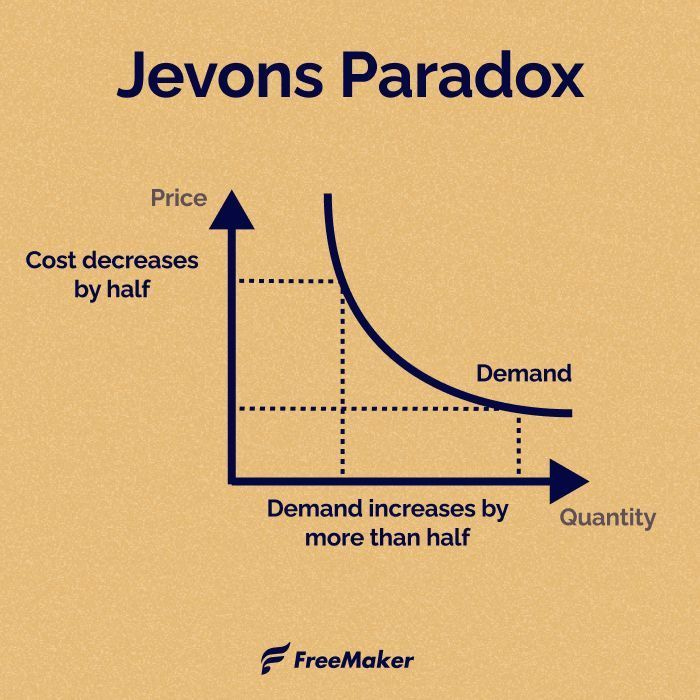

Jensen is betting on the phenomenon called the Jevons Paradox:

“As technological progress increases the efficiency with which a resource is used, the rate of consumption of that resource actually rises, because it becomes cheaper and more useful”

This causes demand to increase, leading to increased resource consumption.

So the logic here is that if a token costs 1/10th as much to produce (via the Rubin platform), developers won't just pocket the savings; instead, they’ll build and run models that consume far more tokens (think 100x more).

Agentic AI and reasoning-heavy models require a massive number of tokens to operate. Nvidia is making them more financially viable, betting that the resulting growth in token usage will outpace the drop in cost per token and drive more chip demand overall.
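To make Jensen’s bet concrete, here’s a toy model of the Jevons Paradox. It’s a minimal sketch, assuming a constant-elasticity demand curve; the function name `spend_multiplier` and the elasticity values are hypothetical and illustrative, not Nvidia data. The key idea: if token demand is elastic enough (elasticity above 1), cutting the cost per token *raises* total spending on compute, while inelastic demand (below 1) would mean cannibalization.

```python
"""Toy Jevons Paradox model (hypothetical numbers, not Nvidia data)."""


def spend_multiplier(cost_reduction, elasticity):
    """Return (token-demand multiplier, total-spend multiplier) after the
    per-token cost falls by `cost_reduction`x.

    Assumes a constant-elasticity demand curve: tokens ∝ price^(-elasticity),
    so a `cost_reduction`-fold price cut multiplies tokens by
    cost_reduction ** elasticity. Total spend = price * tokens.
    """
    token_multiplier = cost_reduction ** elasticity
    spend = token_multiplier / cost_reduction  # new price is 1/cost_reduction
    return token_multiplier, spend


# Compare inelastic, unit-elastic, and elastic demand for a 10x cheaper token.
for elasticity in (0.5, 1.0, 2.0):
    tokens, spend = spend_multiplier(cost_reduction=10.0, elasticity=elasticity)
    print(f"elasticity={elasticity}: tokens x{tokens:.1f}, total spend x{spend:.2f}")
```

With an elasticity of 2, a 10x cost cut yields 100x more tokens and 10x more total spend, which is the scenario Nvidia is betting on; at 0.5, total spend falls, which is the cannibalization worry.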

In addition to the new Rubin platform, Nvidia is making strides on the consumer front.

The chipmaker announced partnerships with consumer electronics manufacturers to embed its AI processing capabilities into laptops, tablets, and even home entertainment systems.

This strategic expansion matters because Nvidia's extraordinary valuation—trading at roughly 35x forward earnings—depends on the company finding new growth avenues beyond its core business.

The data center segment, whilst still growing at impressive double-digit rates, cannot indefinitely sustain the explosive revenue increases that have propelled Nvidia's share price upward by more than 240 per cent over the past two years.

— Winvesta

This illustrates that Nvidia is starting to move beyond its data center dominance to bring AI into the consumer market, a market worth roughly $150 billion.

AMD’s Yotta-Scale Vision & Broader AI Compute Strategy

AMD responded with its own vision, although investors received it less warmly: the stock dipped slightly after the conference.

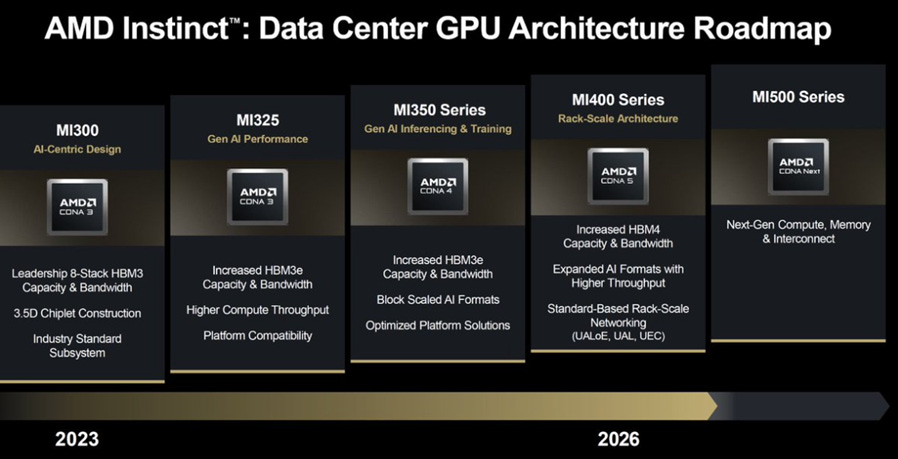

AMD’s stage announcements included the upcoming MI400 series, the Helios rack-scale platform, new Ryzen AI processors, an updated gaming CPU and new software developments.

First, I want to compare Nvidia’s developments with AMD’s new technology. The two can look similar, so I want to make the distinctions clear.

NVIDIA’s pivot to extreme token efficiency with the Rubin platform is surely a competitive move, and something AMD has taken notice of.

Nvidia is trying to convert the AI infrastructure market from a ‘chip demand’ model (where you pay for whatever chips you can get) to more of a ‘system utility’ model (where you only buy the most efficient system).

While Nvidia’s GPUs are more token-efficient, AMD’s Instinct MI455X (also debuted at CES 2026) boasts a massive 432GB of HBM4 (the latest generation of high-bandwidth memory!).

While NVIDIA is winning on compute efficiency (cost and energy per token), AMD is competing on model fit.

More memory per GPU allows AMD to fit massive, multi-trillion-parameter models or ultra-long context windows onto fewer GPUs.

For customers like OpenAI who want to avoid complete ecosystem dominance by Nvidia, keeping models on fewer chips can sometimes save more money than raw token efficiency does.
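Here’s a back-of-envelope sketch of the “model fit” argument. It assumes a hypothetical 2-trillion-parameter model stored at 1 byte per parameter (8-bit weights), reserves 20% of each GPU’s memory for KV cache, activations and runtime overhead, and ignores parallelism overheads. The 432GB figure is the MI455X capacity cited above; the 288GB GPU and the helper name `min_gpus_for_weights` are hypothetical, chosen only for comparison.

```python
import math

MI455X_HBM_GB = 432  # per-GPU HBM4 capacity cited for AMD's MI455X


def min_gpus_for_weights(params_billions, bytes_per_param,
                         hbm_gb_per_gpu, usable_fraction=0.8):
    """Smallest GPU count whose combined memory can hold the model weights.

    Assumptions (hypothetical, for illustration only):
    - params_billions * bytes_per_param gives the weight size in GB (1 GB = 1e9 bytes)
    - only `usable_fraction` of each GPU's memory holds weights; the rest is
      left for KV cache, activations and runtime overhead
    - interconnect and parallelism overheads are ignored
    """
    weights_gb = params_billions * bytes_per_param
    usable_gb_per_gpu = hbm_gb_per_gpu * usable_fraction
    return math.ceil(weights_gb / usable_gb_per_gpu)


# Hypothetical 2-trillion-parameter model in 8-bit weights (1 byte/param).
for hbm_gb in (MI455X_HBM_GB, 288):  # 288 GB: hypothetical comparison GPU
    gpus = min_gpus_for_weights(2000, 1, hbm_gb)
    print(f"{hbm_gb} GB/GPU -> at least {gpus} GPUs just for the weights")
```

Under these assumptions the 432GB part needs 6 GPUs versus 9 for the 288GB part. Treat these as lower bounds: real deployments also shard models across more GPUs for throughput.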

Nvidia’s token efficiency is also a byproduct of its new NVLink 6, the high-bandwidth interconnect that links a rack’s GPUs and CPUs so they can compute efficiently, in lockstep.

AMD uses a more general, open-standard alternative called UALink. If AMD can integrate it elegantly within the new Helios rack, this will be a hyper-lucrative opportunity for the business and allow it to compete on par with Nvidia.

Now, let’s briefly discuss the AMD developments.

While the market’s reaction to AMD’s keynote was a bit ‘meh’ (reflected in that slight stock dip), I’m slightly more bullish than others coming out of CES.

We’ve already referenced the MI455X and the Helios platform, but they were the main features of Lisa Su’s presentation, so they deserve a closer look.

The Helios platform was pitched as the building block for “Yotta-scale” compute (this is just a lot of compute / performance. Trust me, I don’t fully grasp how much this actually is lol). Each rack features 72 Instinct MI455X GPUs, the new EPYC ‘Venice’ CPUs and Pensando ‘Vulcano’ NICs for networking.

This thing delivers up to 3 AI exaflops in a single rack. I looked this up, and for a sense of physical scale, it weighs nearly 7,000 pounds (about the weight of two compact cars!).
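“Yotta” is just the SI prefix for 10^24, so a bit of arithmetic on the headline numbers helps put Helios in context. This sketch assumes the 3-exaflop figure is a vendor peak number (typically quoted at low precision) spread evenly across the rack’s 72 GPUs; real sustained throughput will be lower.

```python
# Back-of-envelope Helios math using the keynote's headline numbers.
RACK_AI_EXAFLOPS = 3   # "up to 3 AI exaflops" per Helios rack (peak claim)
GPUS_PER_RACK = 72     # Instinct MI455X GPUs per rack

EXA = 10**18           # SI prefix: exa = 10^18
YOTTA = 10**24         # SI prefix: yotta = 10^24

# Implied peak throughput per GPU, in petaflops (1 exaflop = 1,000 petaflops).
per_gpu_petaflops = RACK_AI_EXAFLOPS * 1000 / GPUS_PER_RACK

# Whole racks needed to reach one yottaflop at that peak rate (integer division).
racks_per_yottaflop = YOTTA // (RACK_AI_EXAFLOPS * EXA)

print(f"~{per_gpu_petaflops:.1f} petaflops per GPU")
print(f"~{racks_per_yottaflop:,} racks for 1 yottaflop")
```

That works out to roughly 41.7 petaflops per GPU and about 333,333 racks per yottaflop, which is why “yotta-scale” describes an industry-wide build-out rather than any single rack.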

I think many enterprise customers will want Helios because it’s built on an open rack design, letting them avoid Nvidia’s walled garden.

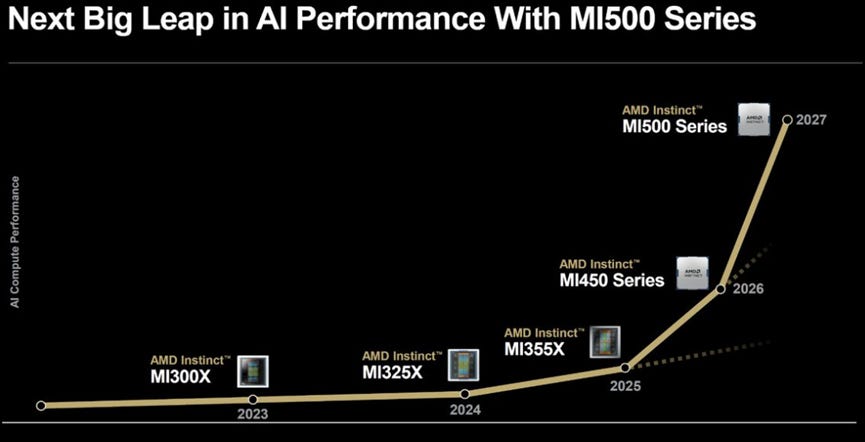

AMD also unveiled their MI400 and MI500 series in more detail, which can be referenced in the two images below:

One crazy stat I have to mention here on the MI500 series.

Lisa claimed a 1,000x performance increase over the MI300X from 2023. It’s a bold claim, but it shows AMD isn't slowing down its R&D spending.

AMD also headlined their Ryzen AI 400 and Halo MAX+ series.

The Halo platform was the dark horse of the show in my opinion (we already knew about Helios and the MI400 series): it’s essentially a compact mini-PC developer platform designed for building, testing and running large models locally, without relying on cloud infrastructure!

This shows AMD is firing on all cylinders on the developer / software side as well.

I truly believe AMD is lowering the barrier to entry for anyone who wants to build AI without getting permanently stuck in the Nvidia ecosystem.

The Bottom Line of AMD vs. NVIDIA

It’s a duopoly.

At this point, there’s no denying that.

Nvidia still owns ~90% of the data center market for AI/GPU compute, but market data from early 2026 suggests AMD has successfully grown its data center market share to roughly 10% (up from ~5% in 2024).

But Nvidia wants the whole stack. They build the chips, the racks, the networking and the software. It’s a closed-source walled garden that offers the highest token efficiency in the world.

If you believe the Jevons Paradox will drive near-limitless demand for the fastest possible compute, and that Nvidia will capitalize on it, then Nvidia could be the best bet for your portfolio.

AMD is the open alternative. By doubling down on UALink and massive per-GPU memory, it is the freedom play for hyperscalers who don't want to be beholden to Jensen’s pricing.

If you believe the market will eventually revolt against proprietary systems, shift toward inference at scale, and value a full-stack ecosystem with GPUs, CPUs, DPUs, NPUs, FPGAs and more, AMD is the clear winner, as it offers the broadest open portfolio of compute.

There are many other factors in play here, such as pricing power, financial dynamics, future partnerships, and how quickly (if at all) developers adopt ROCm, AMD’s open-source GPU software stack.

So…

Nvidia and AMD aren’t just competing on chips; they’re competing on philosophies for how the AI compute economy gets built.

CES 2026 made one thing clear: AI is about who controls the rails of computation.

From here, for us investors, it’s less about predictions and more about tracking how these competing approaches shape the pace, cost and direction of AI adoption over time.

Disclaimer: The information provided in this publication is for informational and educational purposes only and does not constitute investment, financial, or other professional advice. ThePrivatePublicInvestor and its authors are not registered investment advisors or broker-dealers. All opinions expressed reflect personal views as of the date published and are subject to change without notice. While efforts are made to ensure accuracy, no guarantee of completeness or reliability is given. Past performance is not indicative of future results. The author may hold positions in securities discussed. Use of this content is at your own risk.
